The goal of this article is not to explore Airflow in depth. Instead, it aims to provide insights into the relationship between capacity SKUs and Airflow pool types.

Capacity planning and forecasting are very important, so that you don’t risk throttling your capacity.

In the case of Airflow jobs (pricing), you pay for pool uptime, so it is straightforward to forecast consumption and to pick an appropriate size that does not hinder other workloads.

In short, Airflow job is Microsoft Fabric’s offering of Apache Airflow, aimed at users who prefer orchestrating activities via code, or who just want to complement their orchestration needs.

To run a job, you need a pool. There are two types of pools: starter and custom.

Starter pools are better suited for dev purposes, and shut down after 20 minutes of idleness.

The custom pool’s biggest selling point is support for scenarios that require a pool running 24/7 (“always-on”).

The pricing model distinguishes two job sizes, small and large, which translate into different CU consumption rates: 5 CUs and 10 CUs, respectively.
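Since billing follows pool uptime, a rough forecast is just the CU rate multiplied by the hours the pool is up. The sketch below illustrates that arithmetic for a pool with no extra nodes; the function name, the 30-day month, and the 8-hours-a-day scenario are assumptions made for illustration, not figures from the official docs.

```python
# Base CU rates per pool size, as stated in this article.
BASE_CU_RATE = {"small": 5.0, "large": 10.0}


def cu_hours(pool_size: str, uptime_hours: float) -> float:
    """CU-hours consumed by a pool (no extra nodes) over the given uptime."""
    return BASE_CU_RATE[pool_size] * uptime_hours


# Hypothetical 30-day month: an always-on pool vs. one that is up 8 hours a day.
always_on = cu_hours("small", 24 * 30)
working_hours = cu_hours("small", 8 * 30)
print(f"always-on: {always_on:.0f} CU-hours, 8 h/day: {working_hours:.0f} CU-hours")
```

The same function can be called with `"large"` to compare both sizes for a given uptime pattern.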

Choosing the size has to be an iterative process, as the only guidance in the public docs is the following:

- Compute node size: The size of compute node you want to run your environment on. You can choose the value Large for running complex or production DAGs and Small for running simpler Directed Acyclic Graphs (DAGs).

…at the time of writing, I have not found a definition of what makes a DAG simple or complex.

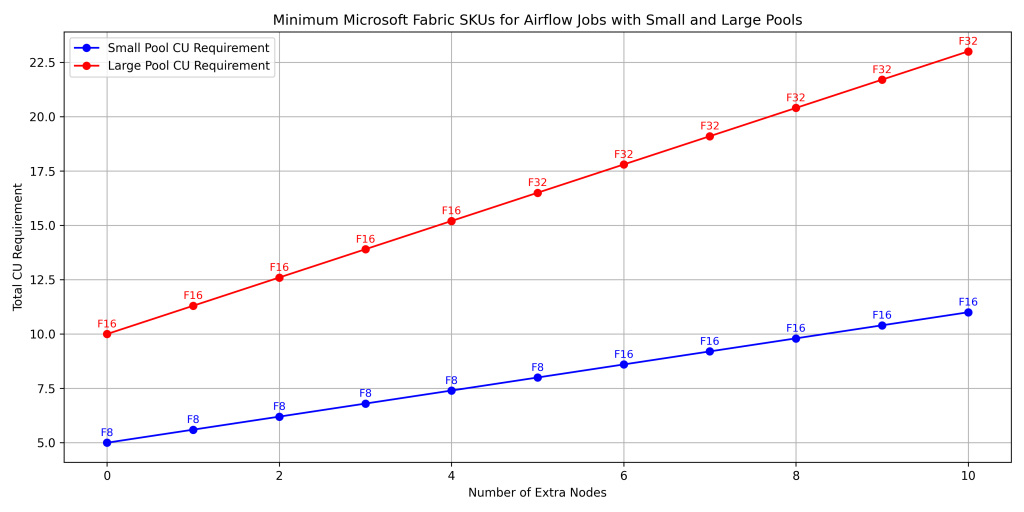

The following exercise enumerates all possible pool configurations:

| Pool Size | Extra Nodes | Total CU Requirement | Minimum Valid SKU |
| --- | --- | --- | --- |
| Small | 0 | 5.0 | F8 |
| Small | 1 | 5.6 | F8 |
| Small | 2 | 6.2 | F8 |
| Small | 3 | 6.8 | F8 |
| Small | 4 | 7.4 | F8 |
| Small | 5 | 8.0 | F8 |
| Small | 6 | 8.6 | F16 |
| Small | 7 | 9.2 | F16 |
| Small | 8 | 9.8 | F16 |
| Small | 9 | 10.4 | F16 |
| Small | 10 | 11.0 | F16 |
| Large | 0 | 10.0 | F16 |
| Large | 1 | 11.3 | F16 |
| Large | 2 | 12.6 | F16 |
| Large | 3 | 13.9 | F16 |
| Large | 4 | 15.2 | F16 |
| Large | 5 | 16.5 | F32 |
| Large | 6 | 17.8 | F32 |
| Large | 7 | 19.1 | F32 |
| Large | 8 | 20.4 | F32 |
| Large | 9 | 21.7 | F32 |
| Large | 10 | 23.0 | F32 |
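The table can be reproduced with a few lines of Python. This is a minimal sketch, not an official calculator: the per-extra-node rates (0.6 CU for small, 1.3 CU for large) are inferred from the step between consecutive rows of the table, and the helper names are hypothetical. `Decimal` is used so that boundary cases such as 8.0 CUs on an F8 are compared exactly.

```python
from decimal import Decimal

# (base rate, rate per extra node) in CUs. Base rates come from the article;
# per-node rates are inferred from the table rows (assumption).
RATES = {
    "Small": (Decimal("5.0"), Decimal("0.6")),
    "Large": (Decimal("10.0"), Decimal("1.3")),
}

# Fabric F SKUs; the number in the SKU name is its CU count.
F_SKUS = [2, 4, 8, 16, 32, 64, 128, 256, 512, 1024, 2048]


def total_cu(pool_size: str, extra_nodes: int) -> Decimal:
    """Total CU rate of a pool of the given size with N extra nodes."""
    base, per_node = RATES[pool_size]
    return base + per_node * extra_nodes


def minimum_sku(required_cu: Decimal) -> str:
    """Smallest F SKU whose CU count covers the required CU rate."""
    for cus in F_SKUS:
        if cus >= required_cu:
            return f"F{cus}"
    raise ValueError(f"No F SKU covers {required_cu} CUs")


# Print one table row per configuration (0 to 10 extra nodes, as in the table).
for size in RATES:
    for nodes in range(11):
        cu = total_cu(size, nodes)
        print(f"| {size} | {nodes} | {cu} | {minimum_sku(cu)} |")
```

The "minimum valid SKU" here assumes the pool runs 24/7 and is the only workload on the capacity.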

In a more visual way (with the help of Copilot):

Observations – for a 24/7 uptime:

- For small pools, the minimum SKU size is F8, with no extra nodes.

- For large pools, the minimum SKU size is F16, with no extra nodes.

(This is the minimum SKU size needed to avoid capacity throttling, assuming the pool is the only workload using the capacity.)
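In practice a capacity is rarely dedicated to a single pool, so it can help to look at how much of each SKU an always-on pool would take. The snippet below is a back-of-the-envelope sketch: `capacity_share` is a hypothetical helper, and the calculation simply divides the pool's CU rate by the SKU's CU count, ignoring any smoothing or bursting behaviour of the capacity.

```python
def capacity_share(pool_cu: float, sku_cu: int) -> float:
    """Fraction of a capacity's CUs taken by an always-on pool."""
    return pool_cu / sku_cu


# Small pool (5 CUs, no extra nodes) on a few F SKUs.
for sku in (8, 16, 32, 64):
    share = capacity_share(5.0, sku)
    print(f"F{sku}: {share:.1%} used by the pool, {1 - share:.1%} left for other workloads")
```

A share close to 100% means the pool fits only if nothing else runs on that capacity.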

Lower SKU sizes can still be used with starter pools for experimentation and exploration (dev purposes).

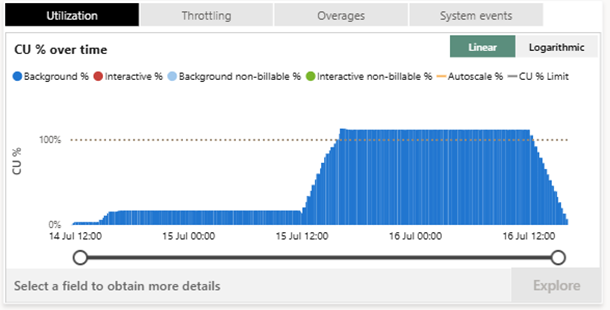

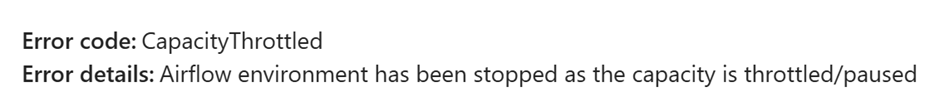

Here is an example of what might happen, from the Fabric Capacity Metrics app perspective, if you don’t take the chart above into account:

In short, not all capacity SKUs support every possible pool composition (in terms of size and extra nodes), at least if you plan to run the pool 24/7.

Thanks for reading!

References

https://learn.microsoft.com/en-us/fabric/data-factory/apache-airflow-jobs-concepts

Apache Airflow Job workspace settings – Microsoft Fabric | Microsoft Learn